Author

Published

29 Jun 2022

Form Number

LP1616

PDF size

5 pages, 240 KB

Lenovo is committed to supporting MLPerf

As a leader in Artificial Intelligence, Lenovo is committed to supporting MLPerf, helping customers make better-informed decisions for their AI workloads.

MLPerf is a consortium of AI leaders from academia, research labs, and industry. Its mission is to "build fair and useful benchmarks" that provide unbiased evaluations of training and inference performance for hardware, software, and services—all conducted under prescribed conditions.

Lenovo understands the complexity of deploying AI solutions that solve real business challenges. For example, AI is dramatically transforming retail. Key AI trends in retail, and real-life applications where retailers are using AI, include the following:

- Up to 4X increase annually for the next three years in autonomous shopping, such as autonomous checkout and NanoStores (wholly automated convenience stores)

- Over $100B in annual shrinkage, which retailers are addressing with AI for loss prevention, including ticket switching, mis-scanning, and security

- Up to 50% of top retailers use AI for store analytics to disclose demographic analysis, customer engagement, price matching, and stock-outs.

Choosing the best infrastructure for your workload should not be a barrier to your AI initiatives. Lenovo is making continual progress in supporting more models and benchmarks in the MLPerf portfolio. This momentum includes extending and enhancing our AI infrastructure portfolio so customers can make decisions with better, faster insights. Our goal through MLPerf 2.0 (inference and training) is to bring clarity to infrastructure decisions so customers can focus on the overall success of their AI deployments.

MLPerf 2.0 highlights

Some recent highlights supporting the MLPerf 2.0 efforts include:

- Expanded Lenovo infrastructure systems testing (seven inference and three training)

- Support for NVIDIA A100 Tensor Core GPUs on MLPerf Inference 2.0 AI Training Benchmark

- Engineering enhancements with Lenovo Neptune, including liquid-cooled CPUs, GPUs, DIMMs, and storage

- Optimized Lenovo servers for AI:

  - Lenovo ThinkSystem SR670 V2 with 8x 80 GB A100

  - Lenovo ThinkSystem SR670 V2 with 4x SXM A100 (80GB/500W)

  - Lenovo ThinkSystem SD650-N V2 with 4x SXM A100 (80GB/500W)

- Expanded benchmark submission, now including MiniGo score for Reinforcement Learning

- Improved Performance: Lenovo achieved the best scores for 4xSXM servers for MaskRCNN (Object Detection) and 3D-Unet (medical imaging)

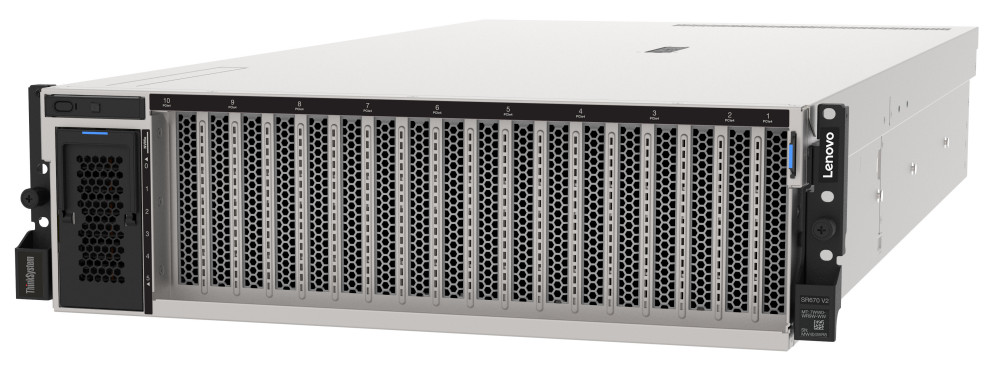

Figure 1. Lenovo ThinkSystem SR670 V2 configured to support eight NVIDIA A100 GPUs

MLPerf results

Lenovo achieved the following improvements in comparison with previous rounds:

- 3% in ResNet score (SXM server)

- 9% in MaskRCNN score (SXM server)

- 18% for PCIe

- 42% in RNN-T (Recurrent Neural Network – Transducer)
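
The brief does not state how these percentages were derived. MLPerf Training results are reported as time to train, where lower is better, so a round-over-round gain could be read either as a reduction in training time or as a speedup. The following minimal Python sketch shows both readings. The function names and the sample values are hypothetical and are not Lenovo's published results.

```python
def time_reduction_pct(previous_minutes: float, current_minutes: float) -> float:
    """Percentage by which time to train dropped relative to the previous round."""
    if previous_minutes <= 0 or current_minutes <= 0:
        raise ValueError("times must be positive")
    return (previous_minutes - current_minutes) * 100.0 / previous_minutes


def speedup_pct(previous_minutes: float, current_minutes: float) -> float:
    """Percentage by which training got faster (previous / current - 1)."""
    if previous_minutes <= 0 or current_minutes <= 0:
        raise ValueError("times must be positive")
    return (previous_minutes - current_minutes) * 100.0 / current_minutes


if __name__ == "__main__":
    # Hypothetical times to train in minutes, chosen only for illustration.
    previous, current = 100.0, 80.0
    print(f"time reduction: {time_reduction_pct(previous, current):.1f}%")
    print(f"speedup:        {speedup_pct(previous, current):.1f}%")
```

The two readings give different numbers for the same pair of results, so it helps to confirm which definition a vendor uses before comparing improvement claims.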

Lenovo demonstrated AI performance across various infrastructure configurations, including NVIDIA A16 GPUs and NVIDIA Triton™ Inference Server, running on Lenovo ThinkSystem platforms with liquid-cooled CPUs. We also showcased the efficiency and performance of our air-cooled systems like the SR670 V2, providing both PCIe and HGX deployment options in a standard data center platform that enterprises of all sizes can quickly deploy.

These engineering feats reinforce the performance benefits of Lenovo ThinkSystem servers with Neptune™, which deliver exascale-grade performance in a standard, dense data center footprint.

Lenovo and NVIDIA

Lenovo and NVIDIA collaborate extensively in Artificial Intelligence. We partner through Lenovo AI Innovation Centers, where we work to ensure the success of our mutual customers with their AI initiatives. Here, customers get access to Lenovo and NVIDIA AI experts to consult on projects, the proper infrastructure to run a proof of concept, and proof of ROI before deployment.

We also collaborate through the Lenovo AI Innovators program, which includes multiple AI software companies whose code is optimized for Lenovo servers with NVIDIA GPUs.

For more information

For more information, see the following resources:

- Explore Lenovo AI solutions:

https://www.lenovo.com/us/en/servers-storage/solutions/analytics-ai/

- Engage the Lenovo AI Center of Excellence:

https://lenovoaicodelab.atlassian.net/servicedesk/customer/portal/3

MLCommons®, the open engineering consortium and leading force behind MLPerf, has now released new results for MLPerf benchmark suites:

- Benchmark results: https://mlcommons.org/en/training-normal-20/

- Latest news about MLCommons: https://mlcommons.org/en/news/mlperf-training-2q2022/

Trademarks

Lenovo and the Lenovo logo are trademarks or registered trademarks of Lenovo in the United States, other countries, or both. A current list of Lenovo trademarks is available on the Web at https://www.lenovo.com/us/en/legal/copytrade/.

The following terms are trademarks of Lenovo in the United States, other countries, or both:

Lenovo®

Neptune®

ThinkSystem®

Other company, product, or service names may be trademarks or service marks of others.
